Nov. 14, 2024 05:35

vae rdp

Exploring Variational Autoencoders (VAE) and their Role in Reinforcement Learning and Decision Processes (RDP)

In recent years, machine learning has made remarkable strides, particularly in computer vision and natural language processing. Among the models that have emerged, Variational Autoencoders (VAEs) stand out for their approach to unsupervised learning and generative modeling. This article looks at how VAEs relate to Reinforcement Learning (RL) Decision Processes (RDP), and at how the compact representations a VAE learns can help an agent learn from complex environments.

What are Variational Autoencoders?

Variational Autoencoders are a type of generative model that aims to learn continuous representations of data. They consist of two main components: an encoder, which maps input data into a lower-dimensional latent space, and a decoder, which reconstructs the original data from this latent representation. The 'variational' aspect comes from the application of variational inference, a technique used to approximate complex probability distributions. Because the exact likelihood of the data is intractable, training instead maximizes a lower bound on it (the evidence lower bound, or ELBO), which rewards accurate reconstruction while penalizing latent codes whose distribution drifts from a chosen prior, typically a standard Gaussian.
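The objective described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a full VAE: it assumes the encoder outputs a diagonal Gaussian (`mu`, `log_var`), the prior is a standard normal, and reconstruction quality is measured by squared error. The function names are hypothetical and the encoder and decoder networks themselves are left out.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, diag(sigma^2)) || N(0, I)), summed over latent dims."""
    return -0.5 * np.sum(1.0 + log_var - mu**2 - np.exp(log_var), axis=-1)

def negative_elbo(x, x_recon, mu, log_var):
    """Squared-error reconstruction term plus KL term, averaged over the batch."""
    recon = 0.5 * np.sum((x - x_recon) ** 2, axis=-1)
    return float(np.mean(recon + kl_to_standard_normal(mu, log_var)))
```

Minimizing `negative_elbo` is equivalent to maximizing the lower bound. The sampling step is written as a deterministic function of `mu`, `log_var`, and external noise so that, in a real framework, gradients could flow through it to the encoder.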

The beauty of VAEs lies in their ability to generate new data points that are similar to the training data. This capability makes them particularly valuable in scenarios where acquiring labeled data is challenging or expensive.

Reinforcement Learning and Decision Processes

Reinforcement Learning (RL) is a paradigm where an agent learns to make decisions by interacting with its environment. The agent receives feedback in the form of rewards and uses this information to refine its behavior over time. A key element of RL is the concept of a decision process, usually formalized as a Markov decision process, which describes how an agent transitions between states based on its actions and which rewards it receives along the way.
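To make the state, action, and reward loop concrete, here is a small sketch on a toy problem. Everything in it is an assumption chosen for illustration: a five-state chain with a reward of 1 at the right end, and tabular Q-learning (a standard RL algorithm that the article does not name) driven by a uniformly random behaviour policy.

```python
import numpy as np

N_STATES, GOAL = 5, 4          # states 0..4; reaching state 4 ends the episode
ACTIONS = (-1, +1)             # action 0 moves left, action 1 moves right

def step(state, action):
    """Deterministic transition: returns (next_state, reward, done)."""
    next_state = min(max(state + ACTIONS[action], 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.5, gamma=0.9, max_steps=200, seed=0):
    """Learn action values from random interaction with the chain."""
    rng = np.random.default_rng(seed)
    q = np.zeros((N_STATES, len(ACTIONS)))
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            action = int(rng.integers(len(ACTIONS)))  # uniform random behaviour
            next_state, reward, done = step(state, action)
            # Bootstrapped target: reward now plus discounted best future value.
            target = reward + (0.0 if done else gamma * q[next_state].max())
            q[state, action] += alpha * (target - q[state, action])
            state = next_state
            if done:
                break
    return q
```

Reading the learned table with `np.argmax(q, axis=1)` gives a greedy policy. In this toy chain the non-terminal states should all prefer moving right, since that is the only route to the reward.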

The integration of VAEs into RL can significantly enhance the agent's ability to represent and understand the environment. By utilizing a VAE, agents can learn a more informative latent representation of the state space, allowing for better decision-making policies.
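One way to picture this integration is a policy that never sees the raw observation, only the latent code produced by the encoder. The sketch below assumes a linear stand-in for a trained VAE encoder and a linear policy. Both use random placeholder weights, and the class and function names are hypothetical.

```python
import numpy as np

class LinearGaussianEncoder:
    """Stand-in for a trained VAE encoder: maps an observation to (mu, log_var)."""

    def __init__(self, obs_dim, latent_dim, seed=0):
        rng = np.random.default_rng(seed)
        # Placeholder weights; a real encoder would be trained on observations.
        self.w_mu = rng.normal(scale=0.1, size=(obs_dim, latent_dim))
        self.w_log_var = rng.normal(scale=0.1, size=(obs_dim, latent_dim))

    def encode(self, obs):
        return obs @ self.w_mu, obs @ self.w_log_var

def act(encoder, policy_weights, obs):
    """Greedy action from a linear policy that sees only the latent mean."""
    mu, _ = encoder.encode(obs)
    return int(np.argmax(mu @ policy_weights))

# Usage: a 64-dimensional observation is compressed to 4 latent features
# before the policy chooses among 3 actions.
encoder = LinearGaussianEncoder(obs_dim=64, latent_dim=4)
policy_weights = np.random.default_rng(1).normal(size=(4, 3))
action = act(encoder, policy_weights, np.ones(64))
```

Feeding the latent mean rather than a sampled code to the policy is a design choice made here to keep action selection deterministic; other setups are possible.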

The Synergy Between VAEs and RDP

The combination of VAEs and Reinforcement Learning opens new avenues for improving the efficiency and effectiveness of decision-making processes. Here are several key benefits of this synergy:

1. Dimensionality Reduction: VAEs help reduce the dimensionality of the state space. This is particularly valuable in high-dimensional environments, such as those with image observations, where traditional RL methods may struggle due to the curse of dimensionality. By working in the latent space, agents can focus on the relevant features, which simplifies the learning process.

2. Generative Capabilities: VAEs can generate synthetic states that are statistically similar to the actual states encountered during training. This allows agents to consider states they have not visited, potentially discovering new strategies and improving their performance.

3. Data Efficiency: The generative nature of VAEs facilitates data efficiency. When a VAE forms part of a learned model of the environment, an agent can simulate interactions and learn from those simulations instead of relying solely on real experience, which can be limited or costly to collect.

4. Continuous Action Spaces: In RL, scenarios with continuous action spaces can be challenging. VAEs can be used to learn a continuous representation of the actions, contributing to more fluid and nuanced decision-making.

5. Improved Exploration: VAEs can enhance the exploration strategies of RL agents by giving them a better sense of the uncertainties within the environment. By exploring the learned latent space, agents can make more informed decisions about where to probe next.
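Two of the benefits listed above, generating synthetic states and guiding exploration, can be sketched briefly. The code assumes a decoder is available as a plain function; here a fixed random linear map is used as a placeholder. The novelty bonus based on reconstruction error is one common heuristic rather than something the article prescribes, and all names are hypothetical.

```python
import numpy as np

def sample_synthetic_states(decode, n_samples, latent_dim, rng):
    """Draw latents from the N(0, I) prior and decode them into state space."""
    z = rng.standard_normal((n_samples, latent_dim))
    return decode(z)

def novelty_bonus(states, reconstructions, scale=1.0):
    """Per-state bonus proportional to squared reconstruction error.

    States the VAE reconstructs poorly are treated as unfamiliar.
    """
    return scale * np.sum((states - reconstructions) ** 2, axis=-1)

# Placeholder decoder: a fixed linear map standing in for a trained network.
rng = np.random.default_rng(0)
decoder_weights = rng.normal(size=(2, 6))
synthetic = sample_synthetic_states(lambda z: z @ decoder_weights, 4, 2, rng)
```

In practice the bonus would be added to the environment reward during training, so that the agent is nudged toward states the model has not yet learned to reconstruct.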

Conclusion

The intersection of Variational Autoencoders and Reinforcement Learning Decision Processes offers a promising path for advanced AI techniques. By using VAEs to learn robust and informative representations, RL agents can improve their decision-making, navigate complex environments more effectively, and make better use of limited data. As research continues in this domain, further advances are likely, with potential applications in fields ranging from robotics to game development.

-

Versatile Hpmc Uses in Different Industries

NewsJun.19,2025

-

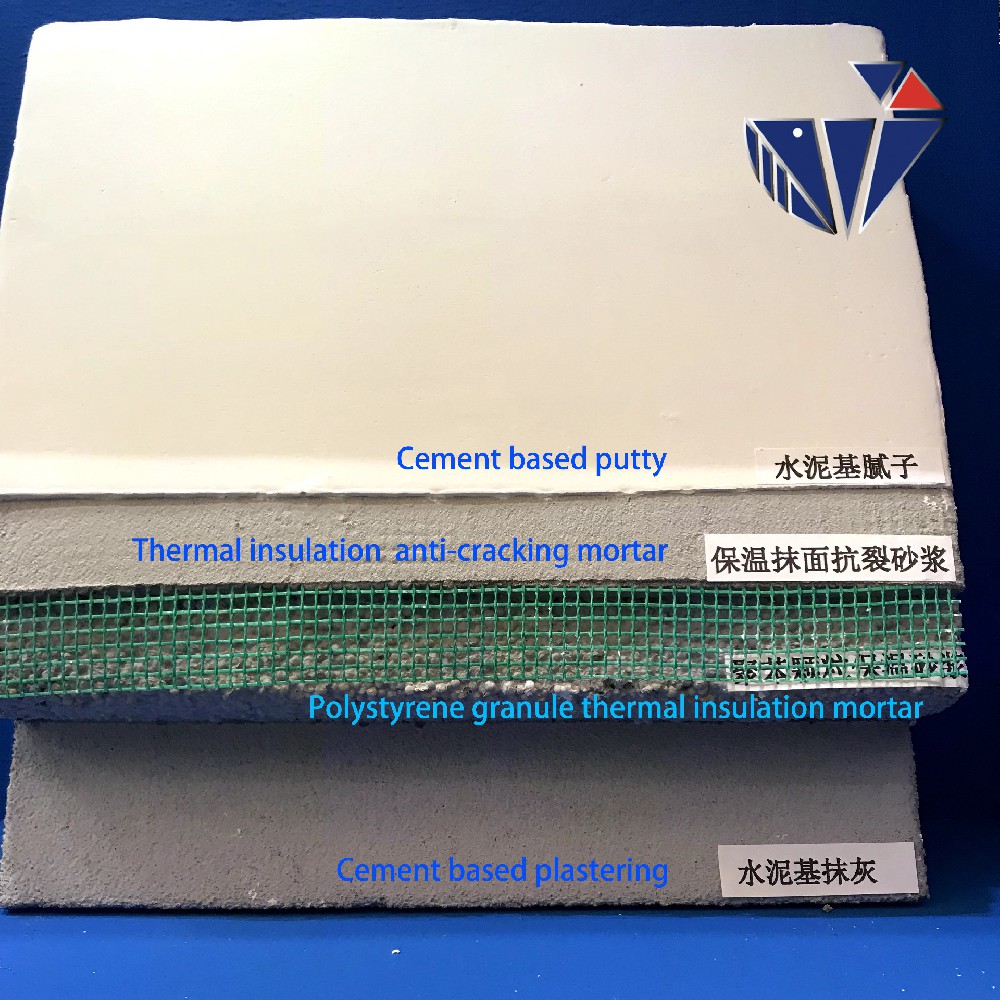

Redispersible Powder's Role in Enhancing Durability of Construction Products

NewsJun.19,2025

-

Hydroxyethyl Cellulose Applications Driving Green Industrial Processes

NewsJun.19,2025

-

Exploring Different Redispersible Polymer Powder

NewsJun.19,2025

-

Choosing the Right Mortar Bonding Agent

NewsJun.19,2025

-

Applications and Significance of China Hpmc in Modern Industries

NewsJun.19,2025